Capturing Photos and Video with AV Foundation

The general steps for taking photos and recording video with AVFoundation are:

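- Create an AVCaptureSession object.

- Use AVCaptureDevice class methods to obtain the devices you need: a camera for photos and video, a microphone for audio.

- Initialize an AVCaptureDeviceInput object with each AVCaptureDevice.

- Initialize an output object: AVCaptureStillImageOutput for still photos, or AVCaptureMovieFileOutput for video.

- Add the input object (AVCaptureDeviceInput) and the output object (AVCaptureOutput) to the AVCaptureSession.

- Create an AVCaptureVideoPreviewLayer for the session, add it to a container view's layer, and call the session's startRunning method to begin capturing.

- Write the captured audio or video data to a file.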

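Main classes used:

- AVCaptureDeviceInput (an input that wraps a capture device)

- AVCaptureSession (the capture session)

- AVCaptureVideoPreviewLayer (the preview layer)

- AVCaptureOutput (abstract base class for outputs)

- AVCaptureStillImageOutput (captures still images)

- AVCaptureAudioDataOutput (delivers raw audio sample buffers)

- AVCaptureVideoDataOutput (delivers raw video frames)

- AVCaptureFileOutput (abstract base class for outputs that write to files, such as AVCaptureMovieFileOutput)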

Configuring the session:

A basic session configuration


```objc
// Create the session and use a 720p preset if the device supports it
_captureSession = [[AVCaptureSession alloc] init];
if ([_captureSession canSetSessionPreset:AVCaptureSessionPreset1280x720]) {
    _captureSession.sessionPreset = AVCaptureSessionPreset1280x720;
}

// getCameraDeviceWithPosition: is a helper defined elsewhere in the app
AVCaptureDevice *captureDevice = [self getCameraDeviceWithPosition:AVCaptureDevicePositionBack];
if (!captureDevice) {
    NSLog(@"Failed to get the back camera.");
    return;
}

// Wrap the camera in a device input; check the return value, not the error
NSError *error = nil;
_captureDeviceInput = [[AVCaptureDeviceInput alloc] initWithDevice:captureDevice error:&error];
if (!_captureDeviceInput) {
    NSLog(@"Failed to create the device input: %@", error.localizedDescription);
    return;
}

// Still image output that produces JPEG data
_captureStillImageOutput = [[AVCaptureStillImageOutput alloc] init];
NSDictionary *outputSettings = @{AVVideoCodecKey: AVVideoCodecJPEG};
[_captureStillImageOutput setOutputSettings:outputSettings];

// Add the input and the output to the session
if ([_captureSession canAddInput:_captureDeviceInput]) {
    [_captureSession addInput:_captureDeviceInput];
}
if ([_captureSession canAddOutput:_captureStillImageOutput]) {
    [_captureSession addOutput:_captureStillImageOutput];
}

// Preview layer that fills the container view
_captureVideoPreviewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:_captureSession];
CALayer *layer = self.viewContainer.layer;
layer.masksToBounds = YES;
_captureVideoPreviewLayer.frame = layer.bounds;
_captureVideoPreviewLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
[layer addSublayer:_captureVideoPreviewLayer];
```

Adding an audio capture device (microphone)

Inputs are not limited to cameras. You can also add a microphone:


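```objc
// Fragment of a BOOL-returning setup method that takes an NSError ** parameter
// named error (for example a hypothetical - (BOOL)setupSession:(NSError **)error),
// so error is already a pointer-to-pointer and is passed through unchanged.
AVCaptureDevice *audioDevice =
    [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
AVCaptureDeviceInput *audioInput =
    [AVCaptureDeviceInput deviceInputWithDevice:audioDevice error:error];
if (audioInput) {
    if ([self.captureSession canAddInput:audioInput]) {
        [self.captureSession addInput:audioInput];
    }
} else {
    return NO;
}
```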

Adding a video output

Besides a still image output, the session can have a movie file output for recording video:


```objc
self.movieOutput = [[AVCaptureMovieFileOutput alloc] init];
if ([self.captureSession canAddOutput:self.movieOutput]) {
    [self.captureSession addOutput:self.movieOutput];
}
```

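Starting or stopping the session

Starting the session puts it in a state where it can capture images and video. startRunning is a blocking call that can take some time, so avoid calling it on the main thread.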


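```objc
// globalQueue is a helper assumed to return a background queue,
// e.g. dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0)
- (void)startSession {
    if (![self.captureSession isRunning]) {
        // startRunning blocks, so run it off the main thread
        dispatch_async([self globalQueue], ^{
            [self.captureSession startRunning];
        });
    }
}

- (void)stopSession {
    if ([self.captureSession isRunning]) {
        dispatch_async([self globalQueue], ^{
            [self.captureSession stopRunning];
        });
    }
}
```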
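The global queue used above is concurrent. A start and a stop dispatched in quick succession are therefore not guaranteed to run in order, and isRunning is read on a different thread from the one that changes it. A common alternative is a private serial queue that owns every call into the session. The following is a minimal sketch under that assumption. CameraController and the "camera.session" queue label are hypothetical names, not part of the original code:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical controller that funnels all session calls through one serial queue
@interface CameraController : NSObject
@property (nonatomic, strong) AVCaptureSession *captureSession;
@property (nonatomic, strong) dispatch_queue_t sessionQueue;
- (void)startSession;
- (void)stopSession;
@end

@implementation CameraController

- (instancetype)init {
    if ((self = [super init])) {
        _captureSession = [[AVCaptureSession alloc] init];
        // Serial queue: blocks run one at a time, in submission order
        _sessionQueue = dispatch_queue_create("camera.session", DISPATCH_QUEUE_SERIAL);
    }
    return self;
}

- (void)startSession {
    dispatch_async(self.sessionQueue, ^{
        // The check and the call happen on the same queue, so they cannot race
        if (!self.captureSession.isRunning) {
            [self.captureSession startRunning];
        }
    });
}

- (void)stopSession {
    dispatch_async(self.sessionQueue, ^{
        if (self.captureSession.isRunning) {
            [self.captureSession stopRunning];
        }
    });
}

@end
```

Every block runs on the same serial queue, so the isRunning check and the start or stop call can no longer interleave with each other. Other session changes, such as adding inputs, can be dispatched to the same queue.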

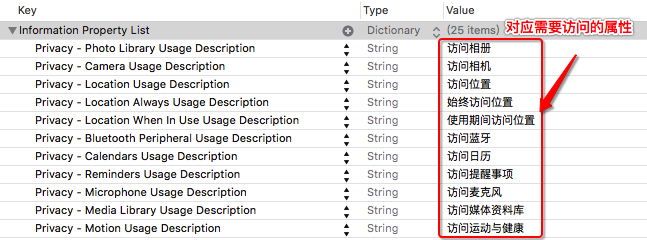

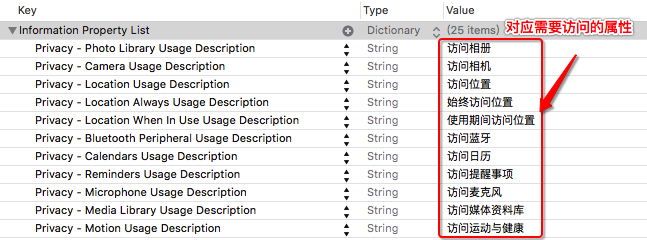

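Privacy permissions

Starting with iOS 10 (Xcode 8), an app that accesses the camera, microphone or photo library without declaring why it needs access will crash.

In Info.plist, add the corresponding key-value pairs: NSCameraUsageDescription for the camera, NSMicrophoneUsageDescription for the microphone and NSPhotoLibraryUsageDescription for the photo library. Each value is a string explaining to the user why the app needs access.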
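The plist entries only supply the text shown in the system prompt. The app should still check the current authorization status before it configures the session. Below is a minimal sketch using AVCaptureDevice's authorization API (iOS 7 and later). RequestCameraAccess is a hypothetical helper name, and the same pattern works for AVMediaTypeAudio:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: reports whether camera access is (or becomes) granted.
// The result is always delivered on the main queue.
static void RequestCameraAccess(void (^completion)(BOOL granted)) {
    void (^finish)(BOOL) = ^(BOOL granted) {
        dispatch_async(dispatch_get_main_queue(), ^{ completion(granted); });
    };
    switch ([AVCaptureDevice authorizationStatusForMediaType:AVMediaTypeVideo]) {
        case AVAuthorizationStatusAuthorized:
            finish(YES);
            break;
        case AVAuthorizationStatusNotDetermined:
            // Shows the system prompt with the NSCameraUsageDescription text;
            // the handler may be called on an arbitrary queue
            [AVCaptureDevice requestAccessForMediaType:AVMediaTypeVideo
                                     completionHandler:finish];
            break;
        default:
            // Denied or restricted: the user has to change this in Settings
            finish(NO);
            break;
    }
}
```

For example, call RequestCameraAccess before building the session. Configure capture only when granted is YES, and otherwise show a message that points the user to Settings.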

Focus (autofocus and manual focus)

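Coordinate space conversion

Screen coordinates have their origin (0,0) at the top-left. On an iPhone 5, for example, the bottom-right corner is (320,568) in portrait and (568,320) in landscape.

The capture device's coordinate space is based on the camera sensor's native orientation (landscape, with the Home button on the right). In that space (0,0) is the top-left and (1,1) the bottom-right, regardless of screen size.

AVCaptureVideoPreviewLayer defines two methods for converting between the two spaces: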

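```objc
// Layer (screen) coordinates -> device coordinates
- (CGPoint)captureDevicePointOfInterestForPoint:(CGPoint)pointInLayer NS_AVAILABLE_IOS(6_0);
// Device coordinates -> layer (screen) coordinates
- (CGPoint)pointForCaptureDevicePointOfInterest:(CGPoint)captureDevicePointOfInterest NS_AVAILABLE_IOS(6_0);
```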
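A tap handler usually converts the touch location with captureDevicePointOfInterestForPoint: before handing it to the device. Below is a minimal sketch. PreviewViewController, previewView, previewLayer and handleTap: are hypothetical names. The placeholder focusAtPoint: stands in for the tap-to-focus method shown later in this section:

```objc
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical view controller. previewLayer is assumed to fill
// previewView's bounds, so view and layer coordinates coincide.
@interface PreviewViewController : UIViewController
@property (nonatomic, strong) UIView *previewView;
@property (nonatomic, strong) AVCaptureVideoPreviewLayer *previewLayer;
@end

@implementation PreviewViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    UITapGestureRecognizer *tap =
        [[UITapGestureRecognizer alloc] initWithTarget:self
                                                action:@selector(handleTap:)];
    [self.previewView addGestureRecognizer:tap];
}

- (void)handleTap:(UITapGestureRecognizer *)recognizer {
    // Tap location in points, relative to the preview
    CGPoint layerPoint = [recognizer locationInView:self.previewView];
    // Convert to the device space, (0,0) top-left to (1,1) bottom-right;
    // the layer accounts for its videoGravity, mirroring and orientation
    CGPoint devicePoint =
        [self.previewLayer captureDevicePointOfInterestForPoint:layerPoint];
    [self focusAtPoint:devicePoint];
}

// Placeholder: forward to a real focus method such as the tap-to-focus code
- (void)focusAtPoint:(CGPoint)point {
    NSLog(@"Focus at device point %@", NSStringFromCGPoint(point));
}

@end
```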

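Setting the flash mode

The flash mode (AVCaptureFlashMode) has three values: AVCaptureFlashModeOff, AVCaptureFlashModeOn and AVCaptureFlashModeAuto.

Always check that the device supports a setting before changing it. For example, the front camera often does not support focusing.

To change the device configuration, first lock it with lockForConfiguration:, make the changes, and then unlock it with unlockForConfiguration.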


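```objc
- (void)setFlashMode:(AVCaptureFlashMode)flashMode {
    // activeCamera returns the device of the current video input
    AVCaptureDevice *device = [self activeCamera];
    // Only change the mode if it differs and the device supports it
    if (device.flashMode != flashMode &&
        [device isFlashModeSupported:flashMode]) {
        NSError *error;
        // Lock, change, unlock
        if ([device lockForConfiguration:&error]) {
            device.flashMode = flashMode;
            [device unlockForConfiguration];
        } else {
            // The delegate protocol is defined by the app
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}
```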

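Tap to focus

Convert the screen coordinate to the capture device's coordinate space and set focusPointOfInterest to the result. Setting the point alone does not start focusing. You must then set focusMode to apply it.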


```objc
#pragma mark - Focus Methods

- (BOOL)cameraSupportsTapToFocus {
    return [[self activeCamera] isFocusPointOfInterestSupported];
}

// point must already be in the device's (0,0)-(1,1) coordinate space
- (void)focusAtPoint:(CGPoint)point {
    AVCaptureDevice *device = [self activeCamera];
    if (device.isFocusPointOfInterestSupported &&
        [device isFocusModeSupported:AVCaptureFocusModeAutoFocus]) {
        NSError *error;
        if ([device lockForConfiguration:&error]) {
            // Set the point first; setting focusMode afterwards starts focusing
            device.focusPointOfInterest = point;
            device.focusMode = AVCaptureFocusModeAutoFocus;
            [device unlockForConfiguration];
        } else {
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}
```
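The code above covers autofocus at a point. For manual focus, iOS 8 added setFocusModeLockedWithLensPosition:completionHandler:, which locks the lens at a normalized position from 0.0 (closest) to 1.0 (farthest). Below is a minimal sketch written as a category on AVCaptureDevice. The category name and the sketch_ method prefix are hypothetical. The support check uses lockingFocusWithCustomLensPositionSupported, which requires iOS 10:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical category; the sketch_ prefix avoids clashing with Apple's methods
@interface AVCaptureDevice (ManualFocusSketch)
- (BOOL)sketch_lockFocusAtLensPosition:(float)lensPosition error:(NSError **)error;
@end

@implementation AVCaptureDevice (ManualFocusSketch)

// Returns NO (leaving *error untouched) if manual focus is unsupported,
// NO with *error set if the configuration lock fails, YES otherwise.
- (BOOL)sketch_lockFocusAtLensPosition:(float)lensPosition error:(NSError **)error {
    if (![self isFocusModeSupported:AVCaptureFocusModeLocked] ||
        !self.isLockingFocusWithCustomLensPositionSupported) {
        return NO;
    }
    if (![self lockForConfiguration:error]) {
        return NO;
    }
    // Clamp to the valid range [0.0, 1.0] before applying
    float clamped = MAX(0.0f, MIN(1.0f, lensPosition));
    [self setFocusModeLockedWithLensPosition:clamped completionHandler:nil];
    [self unlockForConfiguration];
    return YES;
}

@end
```

A slider bound to this method lets the user pull focus by hand. Switching focusMode back to AVCaptureFocusModeContinuousAutoFocus returns control to autofocus.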

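Adjusting the exposure mode

Exposure is configured much like focus, through device.exposureMode, which takes four values:

- AVCaptureExposureModeLocked: exposure is locked at its current value
- AVCaptureExposureModeAutoExpose: exposure is adjusted once, then the mode switches to Locked
- AVCaptureExposureModeContinuousAutoExposure: exposure is adjusted continuously
- AVCaptureExposureModeCustom: exposure is controlled through custom duration and ISO values (iOS 8 and later)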
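The tap-to-focus pattern carries over directly to exposure: check support, lock, set exposurePointOfInterest, then set exposureMode so the point takes effect. Below is a minimal sketch written as a category on AVCaptureDevice. The category name and the sketch_ method prefix are hypothetical:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical category. point is in the device's normalized (0,0)-(1,1)
// space, e.g. the result of captureDevicePointOfInterestForPoint:.
@interface AVCaptureDevice (ExposureSketch)
- (BOOL)sketch_exposeAtPoint:(CGPoint)point error:(NSError **)error;
@end

@implementation AVCaptureDevice (ExposureSketch)

// Returns NO (leaving *error untouched) if the device lacks support,
// NO with *error set if the configuration lock fails, YES otherwise.
- (BOOL)sketch_exposeAtPoint:(CGPoint)point error:(NSError **)error {
    AVCaptureExposureMode mode = AVCaptureExposureModeContinuousAutoExposure;
    if (!self.isExposurePointOfInterestSupported ||
        ![self isExposureModeSupported:mode]) {
        return NO;
    }
    if (![self lockForConfiguration:error]) {
        return NO;
    }
    // As with focus: set the point first, then the mode that uses it
    self.exposurePointOfInterest = point;
    self.exposureMode = mode;
    [self unlockForConfiguration];
    return YES;
}

@end
```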

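Adjusting the torch mode

The torch keeps the LED on continuously, which is useful when recording video. The flash, by contrast, fires only when a photo is taken. The torch is configured the same way as the settings above: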


```objc
- (BOOL)cameraHasTorch {
    return [[self activeCamera] hasTorch];
}

- (AVCaptureTorchMode)torchMode {
    return [[self activeCamera] torchMode];
}

- (void)setTorchMode:(AVCaptureTorchMode)torchMode {
    AVCaptureDevice *device = [self activeCamera];
    // Same pattern as flash: check support, lock, change, unlock
    if (device.torchMode != torchMode &&
        [device isTorchModeSupported:torchMode]) {
        NSError *error;
        if ([device lockForConfiguration:&error]) {
            device.torchMode = torchMode;
            [device unlockForConfiguration];
        } else {
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}
```

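Switching cameras

Stopping and restarting the session to switch cameras would be expensive. Instead, wrap the changes between beginConfiguration and commitConfiguration. The session then applies them as a single atomic change while it keeps running.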


```objc
#pragma mark - Device Configuration

// Returns the camera at the given position, or nil.
// Note: devicesWithMediaType: is deprecated as of iOS 10.
- (AVCaptureDevice *)cameraWithPosition:(AVCaptureDevicePosition)position {
    NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
    for (AVCaptureDevice *device in devices) {
        if (device.position == position) {
            return device;
        }
    }
    return nil;
}

// The camera behind the session's current video input
- (AVCaptureDevice *)activeCamera {
    return self.activeVideoInput.device;
}

// The camera on the opposite side, or nil if there is only one camera
- (AVCaptureDevice *)inactiveCamera {
    AVCaptureDevice *device = nil;
    if (self.cameraCount > 1) {
        if ([self activeCamera].position == AVCaptureDevicePositionBack) {
            device = [self cameraWithPosition:AVCaptureDevicePositionFront];
        } else {
            device = [self cameraWithPosition:AVCaptureDevicePositionBack];
        }
    }
    return device;
}

- (BOOL)canSwitchCameras {
    return self.cameraCount > 1;
}

- (NSUInteger)cameraCount {
    return [[AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo] count];
}

- (BOOL)switchCameras {
    if (![self canSwitchCameras]) {
        return NO;
    }
    NSError *error;
    AVCaptureDevice *videoDevice = [self inactiveCamera];
    AVCaptureDeviceInput *videoInput =
        [AVCaptureDeviceInput deviceInputWithDevice:videoDevice error:&error];
    if (videoInput) {
        // Batch the changes so the running session applies them atomically
        [self.captureSession beginConfiguration];
        [self.captureSession removeInput:self.activeVideoInput];
        if ([self.captureSession canAddInput:videoInput]) {
            [self.captureSession addInput:videoInput];
            self.activeVideoInput = videoInput;
        } else {
            // The new input could not be added: restore the old one
            [self.captureSession addInput:self.activeVideoInput];
        }
        [self.captureSession commitConfiguration];
    } else {
        [self.delegate deviceConfigurationFailedWithError:error];
        return NO;
    }
    return YES;
}
```
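Since iOS 10, the documented replacement for devicesWithMediaType: is AVCaptureDeviceDiscoverySession. Below is a minimal sketch of cameraWithPosition: and cameraCount rewritten with it. The function names are hypothetical. The sketch deliberately considers only the built-in wide-angle camera type, so add other AVCaptureDeviceType values if you need them:

```objc
#import <AVFoundation/AVFoundation.h>

// Discovery session for built-in wide-angle cameras at a position;
// AVCaptureDevicePositionUnspecified matches every position.
static AVCaptureDeviceDiscoverySession *WideAngleDiscovery(AVCaptureDevicePosition position) {
    return [AVCaptureDeviceDiscoverySession
        discoverySessionWithDeviceTypes:@[AVCaptureDeviceTypeBuiltInWideAngleCamera]
                              mediaType:AVMediaTypeVideo
                               position:position];
}

// Returns the wide-angle camera at the given position, or nil
static AVCaptureDevice *CameraWithPosition(AVCaptureDevicePosition position) {
    return WideAngleDiscovery(position).devices.firstObject;
}

// Counts wide-angle cameras across all positions
static NSUInteger CameraCount(void) {
    return WideAngleDiscovery(AVCaptureDevicePositionUnspecified).devices.count;
}
```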

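Taking a photo (capturing a still image)

AVCaptureStillImageOutput captures still images. Call its captureStillImageAsynchronouslyFromConnection:completionHandler: method. The completion handler receives a CMSampleBufferRef containing the image, or an NSError if the capture failed.

ALAssetsLibrary is then used to save the image to the photo library. Note that AVCaptureStillImageOutput is deprecated as of iOS 10 and ALAssetsLibrary as of iOS 9. The code below uses these older APIs.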


```objc
// imageOutput is the session's AVCaptureStillImageOutput
- (void)captureStillImage {
    AVCaptureConnection *connection =
        [self.imageOutput connectionWithMediaType:AVMediaTypeVideo];
    // Match the photo's orientation to the device orientation
    if (connection.isVideoOrientationSupported) {
        connection.videoOrientation = [self currentVideoOrientation];
    }
    id handler = ^(CMSampleBufferRef sampleBuffer, NSError *error) {
        if (sampleBuffer != NULL) {
            // Sample buffer -> JPEG data -> UIImage
            NSData *imageData =
                [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:sampleBuffer];
            UIImage *image = [[UIImage alloc] initWithData:imageData];
            [self writeImageToAssetsLibrary:image];
        } else {
            NSLog(@"NULL sampleBuffer: %@", [error localizedDescription]);
        }
    };
    [self.imageOutput captureStillImageAsynchronouslyFromConnection:connection
                                                  completionHandler:handler];
}

// Requires #import <AssetsLibrary/AssetsLibrary.h>
- (void)writeImageToAssetsLibrary:(UIImage *)image {
    ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];
    // UIImageOrientation values match ALAssetOrientation, so a cast is enough
    [library writeImageToSavedPhotosAlbum:image.CGImage
                              orientation:(ALAssetOrientation)image.imageOrientation
                          completionBlock:^(NSURL *assetURL, NSError *error) {
        if (!error) {
            [self postThumbnailNotifification:image];
        } else {
            id message = [error localizedDescription];
            NSLog(@"Error: %@", message);
        }
    }];
}

// THThumbnailCreatedNotification is a notification name defined by the app
- (void)postThumbnailNotifification:(UIImage *)image {
    dispatch_async(dispatch_get_main_queue(), ^{
        NSNotificationCenter *nc = [NSNotificationCenter defaultCenter];
        [nc postNotificationName:THThumbnailCreatedNotification object:image];
    });
}
```
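On iOS 11 and later, AVCapturePhotoOutput replaces the deprecated AVCaptureStillImageOutput. It delivers each photo as an AVCapturePhoto through a delegate. Flash is requested per shot through AVCapturePhotoSettings rather than through AVCaptureDevice.flashMode. Below is a minimal sketch. PhotoCapturer, imageHandler and capturePhoto are hypothetical names, and photoOutput is assumed to have already been added to a running session:

```objc
#import <AVFoundation/AVFoundation.h>
#import <UIKit/UIKit.h>

// Hypothetical photo capturer for iOS 11+
@interface PhotoCapturer : NSObject <AVCapturePhotoCaptureDelegate>
@property (nonatomic, strong) AVCapturePhotoOutput *photoOutput;
// May be called on a background queue
@property (nonatomic, copy) void (^imageHandler)(UIImage *image, NSError *error);
- (void)capturePhoto;
@end

@implementation PhotoCapturer

- (void)capturePhoto {
    AVCapturePhotoSettings *settings = [AVCapturePhotoSettings photoSettings];
    // Request auto flash only if this output supports it
    if ([self.photoOutput.supportedFlashModes containsObject:@(AVCaptureFlashModeAuto)]) {
        settings.flashMode = AVCaptureFlashModeAuto;
    }
    [self.photoOutput capturePhotoWithSettings:settings delegate:self];
}

- (void)captureOutput:(AVCapturePhotoOutput *)output
    didFinishProcessingPhoto:(AVCapturePhoto *)photo
                       error:(NSError *)error {
    UIImage *image = nil;
    if (!error) {
        // Encoded image data (for example JPEG or HEIF) including metadata
        NSData *data = [photo fileDataRepresentation];
        image = [UIImage imageWithData:data];
    }
    if (self.imageHandler) {
        self.imageHandler(image, error);
    }
}

@end
```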

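Video capture

AVCaptureMovieFileOutput is used to capture video.

When recording starts, a minimal header is written at the beginning of the file. As recording continues, movie fragments are written at a regular interval and the header is filled in to describe them. If the app crashes or recording is interrupted, the movie is therefore still saved up to the end of the last fragment written.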
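The fragment interval is controlled by AVCaptureMovieFileOutput's movieFragmentInterval property, which Apple documents as defaulting to 10 seconds. Below is a minimal sketch that sets it when creating the output. MakeMovieOutput is a hypothetical helper, and 5 seconds in the usage below is an arbitrary example value:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: a movie file output that writes a fragment every `seconds` seconds
static AVCaptureMovieFileOutput *MakeMovieOutput(Float64 seconds) {
    AVCaptureMovieFileOutput *output = [[AVCaptureMovieFileOutput alloc] init];
    // A shorter interval loses less footage after a crash at the cost of a
    // little more writing overhead; kCMTimeInvalid disables fragment writing.
    output.movieFragmentInterval = CMTimeMakeWithSeconds(seconds, 600);
    return output;
}
```

For example, `self.movieOutput = MakeMovieOutput(5.0);` could take the place of the plain `alloc]init]` call in the "Adding a video output" code.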


```objc
#pragma mark - Video Capture Methods

- (BOOL)isRecording {
    return self.movieOutput.isRecording;
}

- (void)startRecording {
    if (![self isRecording]) {
        AVCaptureConnection *videoConnection =
            [self.movieOutput connectionWithMediaType:AVMediaTypeVideo];
        // Record in the current device orientation
        if ([videoConnection isVideoOrientationSupported]) {
            videoConnection.videoOrientation = self.currentVideoOrientation;
        }
        // Enable video stabilization where available
        if ([videoConnection isVideoStabilizationSupported]) {
            if ([[[UIDevice currentDevice] systemVersion] floatValue] < 8.0) {
                // Deprecated in iOS 8
                videoConnection.enablesVideoStabilizationWhenAvailable = YES;
            } else {
                videoConnection.preferredVideoStabilizationMode = AVCaptureVideoStabilizationModeAuto;
            }
        }
        // Smooth autofocus slows lens movement while recording; this sample disables it
        AVCaptureDevice *device = [self activeCamera];
        if (device.isSmoothAutoFocusSupported) {
            NSError *error;
            if ([device lockForConfiguration:&error]) {
                device.smoothAutoFocusEnabled = NO;
                [device unlockForConfiguration];
            } else {
                [self.delegate deviceConfigurationFailedWithError:error];
            }
        }
        self.outputURL = [self uniqueURL];
        [self.movieOutput startRecordingToOutputFileURL:self.outputURL
                                      recordingDelegate:self];
    }
}

- (CMTime)recordedDuration {
    return self.movieOutput.recordedDuration;
}

// temporaryDirectoryWithTemplateString: is not a Foundation method; it comes from
// an app-defined NSFileManager category that creates a unique temporary directory
- (NSURL *)uniqueURL {
    NSFileManager *fileManager = [NSFileManager defaultManager];
    NSString *dirPath =
        [fileManager temporaryDirectoryWithTemplateString:@"kamera.XXXXXX"];
    if (dirPath) {
        NSString *filePath =
            [dirPath stringByAppendingPathComponent:@"kamera_movie.mov"];
        return [NSURL fileURLWithPath:filePath];
    }
    return nil;
}

- (void)stopRecording {
    if ([self isRecording]) {
        [self.movieOutput stopRecording];
    }
}

#pragma mark - AVCaptureFileOutputRecordingDelegate

- (void)captureOutput:(AVCaptureFileOutput *)captureOutput
didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL
      fromConnections:(NSArray *)connections
                error:(NSError *)error {
    if (error) {
        [self.delegate mediaCaptureFailedWithError:error];
    } else {
        [self writeVideoToAssetsLibrary:[self.outputURL copy]];
    }
    self.outputURL = nil;
}

- (void)writeVideoToAssetsLibrary:(NSURL *)videoURL {
    ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];
    if ([library videoAtPathIsCompatibleWithSavedPhotosAlbum:videoURL]) {
        ALAssetsLibraryWriteVideoCompletionBlock completionBlock;
        completionBlock = ^(NSURL *assetURL, NSError *error) {
            if (error) {
                [self.delegate assetLibraryWriteFailedWithError:error];
            } else {
                [self generateThumbnailForVideoAtURL:videoURL];
            }
        };
        [library writeVideoAtPathToSavedPhotosAlbum:videoURL
                                    completionBlock:completionBlock];
    }
}

- (void)generateThumbnailForVideoAtURL:(NSURL *)videoURL {
    dispatch_async([self globalQueue], ^{
        AVAsset *asset = [AVAsset assetWithURL:videoURL];
        AVAssetImageGenerator *imageGenerator =
            [AVAssetImageGenerator assetImageGeneratorWithAsset:asset];
        // Width 100 points; height 0 keeps the aspect ratio
        imageGenerator.maximumSize = CGSizeMake(100.0f, 0.0f);
        // Apply the track's orientation transform
        imageGenerator.appliesPreferredTrackTransform = YES;
        CGImageRef imageRef = [imageGenerator copyCGImageAtTime:kCMTimeZero
                                                     actualTime:NULL
                                                          error:nil];
        UIImage *image = [UIImage imageWithCGImage:imageRef];
        CGImageRelease(imageRef);
        dispatch_async(dispatch_get_main_queue(), ^{
            [self postThumbnailNotifification:image];
        });
    });
}

#pragma mark - Video Orientation

// UIDeviceOrientation and AVCaptureVideoOrientation name the landscape
// directions from opposite points of view, so left and right are swapped.
- (AVCaptureVideoOrientation)currentVideoOrientation {
    AVCaptureVideoOrientation orientation;
    switch ([UIDevice currentDevice].orientation) {
        case UIDeviceOrientationPortrait:
            orientation = AVCaptureVideoOrientationPortrait;
            break;
        case UIDeviceOrientationLandscapeRight:
            orientation = AVCaptureVideoOrientationLandscapeLeft;
            break;
        case UIDeviceOrientationPortraitUpsideDown:
            orientation = AVCaptureVideoOrientationPortraitUpsideDown;
            break;
        default: // LandscapeLeft, face up/down, unknown
            orientation = AVCaptureVideoOrientationLandscapeRight;
            break;
    }
    return orientation;
}
```
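ALAssetsLibrary, used by both writeImageToAssetsLibrary: and writeVideoToAssetsLibrary: above, has been deprecated since iOS 9 in favor of the Photos framework. Below is a minimal sketch of equivalent save helpers built on PHPhotoLibrary. The function names are hypothetical. The app needs photo library permission: NSPhotoLibraryUsageDescription, or NSPhotoLibraryAddUsageDescription for add-only access on iOS 11 and later.

```objc
#import <Photos/Photos.h>
#import <UIKit/UIKit.h>

// Hypothetical helper: save a still image as a new asset in the photo library
static void SaveImageToPhotos(UIImage *image, void (^done)(BOOL ok, NSError *error)) {
    [[PHPhotoLibrary sharedPhotoLibrary] performChanges:^{
        [PHAssetChangeRequest creationRequestForAssetFromImage:image];
    } completionHandler:done];
}

// Hypothetical helper: save a recorded movie file as a new video asset
static void SaveVideoToPhotos(NSURL *fileURL, void (^done)(BOOL ok, NSError *error)) {
    [[PHPhotoLibrary sharedPhotoLibrary] performChanges:^{
        [PHAssetChangeRequest creationRequestForAssetFromVideoAtFileURL:fileURL];
    } completionHandler:done];
}
```

Photos calls the completion handler on an arbitrary serial queue. Dispatch to the main queue before touching UI, for example before posting the thumbnail notification.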